Understanding and Controlling Information Flow in Language Models

Interpreting and controlling large language models (LLMs) is a central challenge in current AI research. Predicting and steering model behavior requires a deeper understanding of how these models work internally. One promising approach is to analyze the information flow, that is, how features emerge and are transformed as they pass through the neural network. A recently published paper presents a method for visualizing this flow and using it for the targeted control of LLMs.

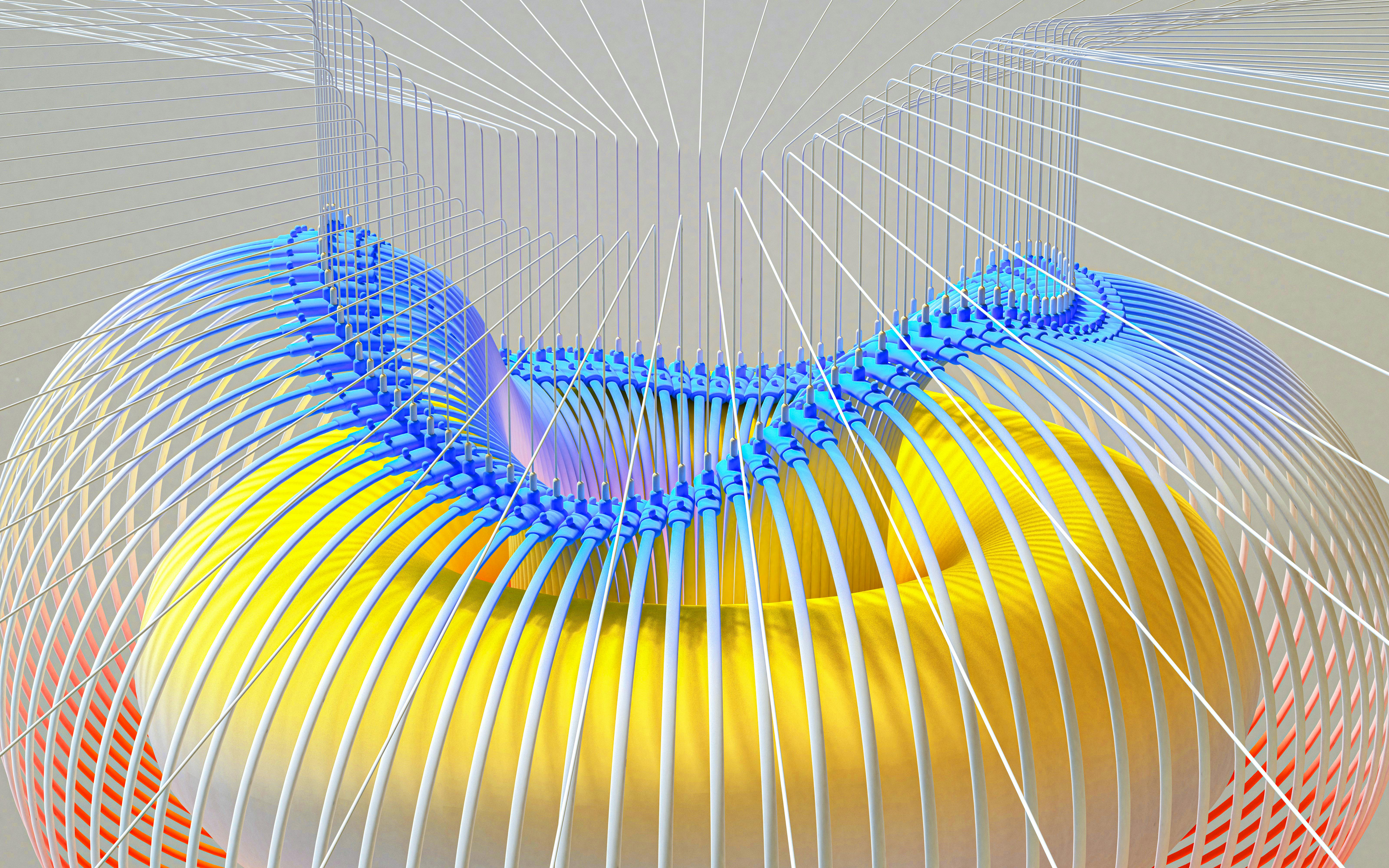

The method builds on sparse autoencoders (SAEs), which are used to identify relevant features in the different layers of the language model. Instead of relying on training data, the researchers use a data-free approach that computes the cosine similarity between features in successive layers. This makes it possible to track how a given feature changes during processing: whether it persists, is transformed, or newly emerges. The result is a detailed representation of the information flow in the form of so-called "flow graphs".
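The cross-layer matching step can be illustrated with a minimal sketch. It assumes each SAE feature is represented by one direction vector (for example a row of the SAE's decoder weights), which is an assumption of this example rather than a detail stated above. The function name `match_features` and the `threshold` value are hypothetical, chosen only for illustration.

```python
import numpy as np

def match_features(dirs_prev, dirs_next, threshold=0.5):
    """Match each feature in the later layer to its closest predecessor.

    dirs_prev, dirs_next: arrays of shape (n_features, d_model), one
    non-zero feature direction per row (assumed representation).
    Returns a list of (next_idx, prev_idx_or_None, cosine_similarity).
    A predecessor of None marks a feature with no close match, i.e. one
    that appears to emerge newly in the later layer.
    """
    # Normalize rows so that a dot product equals cosine similarity.
    prev = dirs_prev / np.linalg.norm(dirs_prev, axis=1, keepdims=True)
    nxt = dirs_next / np.linalg.norm(dirs_next, axis=1, keepdims=True)

    sims = nxt @ prev.T                      # shape (n_next, n_prev)
    best = sims.argmax(axis=1)               # closest predecessor per feature
    best_sim = sims[np.arange(len(nxt)), best]

    # threshold is a hypothetical cutoff, not a value from the paper.
    return [
        (j, int(i) if s >= threshold else None, float(s))
        for j, (i, s) in enumerate(zip(best, best_sim))
    ]

# Toy example with hand-written directions instead of real SAE weights.
layer_a = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
layer_b = np.array([[2.0, 0.0, 0.0], [0.0, 1.0, 1.0], [0.0, 0.0, 1.0]])
edges = match_features(layer_a, layer_b)
```

In this toy example, the first feature of `layer_b` persists from `layer_a`, the second is a partially transformed version of an earlier feature, and the third has no predecessor. Chaining such matches across all layers yields the edges of a flow graph.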

These flow graphs offer new insights into how LLMs work. They enable a fine-grained interpretation of the model's computations and show how semantic information propagates through the layers of the network. Visualizing the information flow makes it easier to understand the complex relationships within the model and to identify potential weaknesses or undesirable behaviors.

The paper also demonstrates how these insights can be used for the targeted control of model behavior. By amplifying or suppressing certain features in the flow graphs, the text output of the model can be influenced. This allows thematic control of the generated text and opens up new applications for LLMs, for example in creative writing or targeted information generation.
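As a rough illustration of amplifying or suppressing a feature, the sketch below rescales the component of a hidden state that lies along one feature direction. This is a simplified stand-in, assuming the feature is a single direction in activation space; it is not the paper's exact procedure, and the name `rescale_feature` and the `factor` parameter are hypothetical.

```python
import numpy as np

def rescale_feature(hidden, direction, factor):
    """Scale the component of each hidden vector along one feature direction.

    hidden:    array of shape (..., d_model), e.g. one vector per token.
    direction: array of shape (d_model,), assumed non-zero.
    factor:    0.0 removes the component (suppression), values above 1.0
               amplify it, and 1.0 leaves the hidden states unchanged.
    """
    d = direction / np.linalg.norm(direction)   # unit feature direction
    coeff = hidden @ d                          # projection per hidden vector
    return hidden + (factor - 1.0) * coeff[..., None] * d

# Toy hidden states for two tokens in a 2-dimensional model.
hidden = np.array([[3.0, 4.0], [0.0, 2.0]])
direction = np.array([2.0, 0.0])

suppressed = rescale_feature(hidden, direction, factor=0.0)
amplified = rescale_feature(hidden, direction, factor=2.0)
```

Only the part of each hidden vector along `direction` changes; the orthogonal part is left untouched. In a real model, such an intervention would be applied to the activations at the layers where the flow graph locates the feature.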

The method is an important step toward causal, cross-layer interpretability of language models. It not only provides a better understanding of internal processes but also offers new tools for the transparent manipulation of LLMs. Further research on this approach could lead to improved control mechanisms and more reliable language models.

Research in this field is dynamic and promising. Developing new methods for analyzing and controlling LLMs is essential for the responsible use of this technology. As AI-based systems grow in importance in areas ranging from communication to decision-making, a deep understanding and precise control of the underlying models are indispensable.

Bibliography:

- https://arxiv.org/abs/2502.03032
- https://www.arxiv.org/pdf/2502.03032
- http://paperreading.club/page?id=282305
- https://openreview.net/pdf?id=vc1i3a4O99
- https://huggingface.co/collections/gsarti/interpretability-and-analysis-of-lms-65ae3339949c5675d25de2f9
- https://ieeevis.org/year/2024/program/papers.html
- https://openreview.net/forum?id=2XBPdPIcFK
- https://app.txyz.ai/daily
- https://www.linkedin.com/posts/nirmal-gaud-210408174_paper-activity-7256357605994860544-UbgY
- https://nips.cc/virtual/2024/papers.html