Sparse Autoencoders Enhance Monosemantic Feature Learning in Vision-Language Models


Monosemantic Features in Vision-Language Models: Insights Through Sparse Autoencoders

Vision-Language Models (VLMs) have advanced rapidly in recent years. They process both images and text, enabling applications such as image captioning, visual question answering, and visual search. Much of their success rests on internal features that capture both visual and semantic information. However, individual neurons in these models are often polysemantic: a single neuron responds to several unrelated concepts, which makes the model hard to inspect. New research suggests that sparse autoencoders (SAEs), trained on a VLM's internal activations, can decompose these representations into "monosemantic" features, each of which corresponds to a single, interpretable concept. Such features promise a more transparent and precise view of what the model has learned about the visual world.

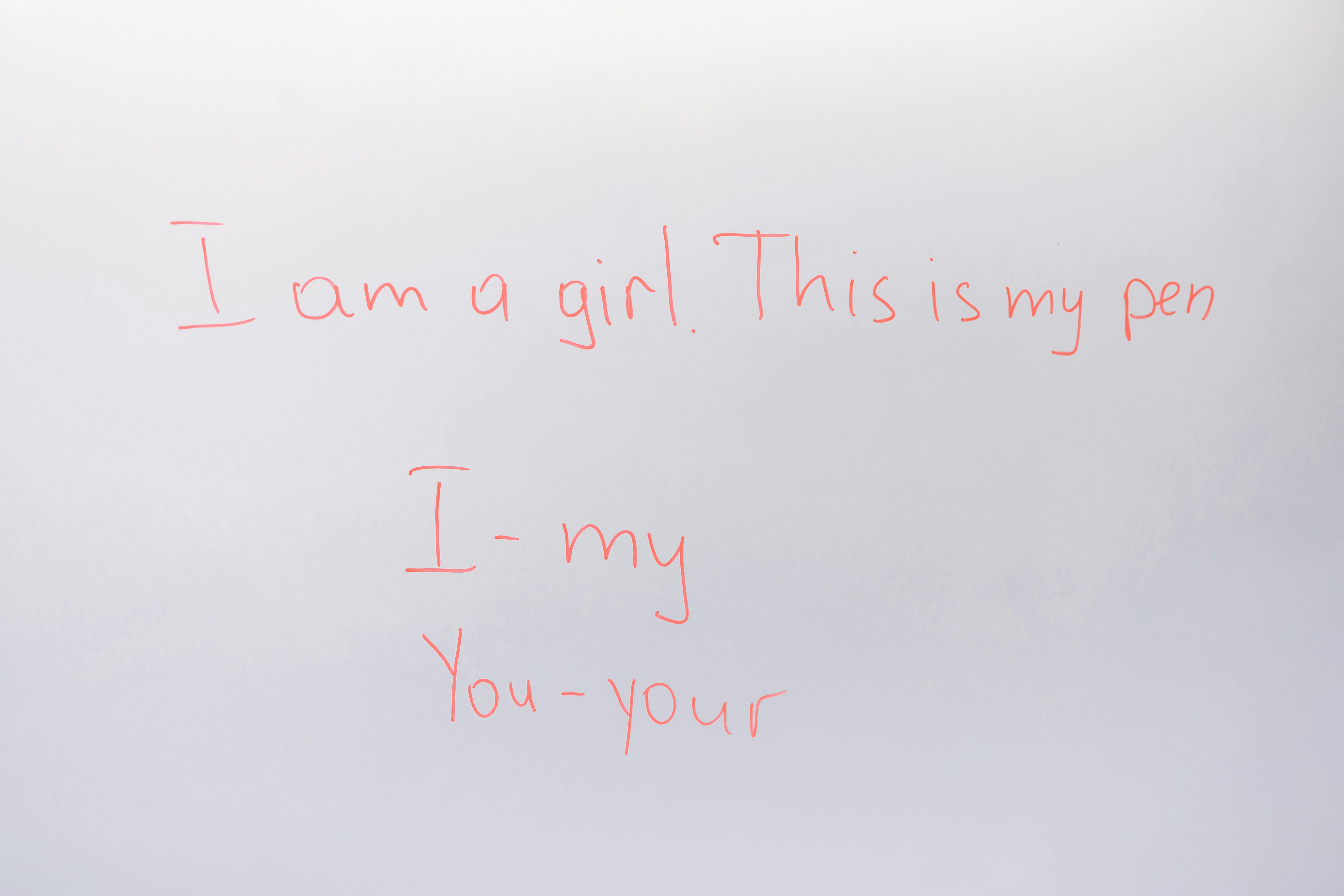

Traditional autoencoders learn compressed representations of data by passing the input through a narrow bottleneck and then reconstructing the original input. Sparse autoencoders add a different constraint: for any given input, only a small fraction of their hidden units are active. This is typically enforced by an L1 penalty on the hidden activations or by keeping only the k largest ones. In interpretability work the hidden layer is usually wider than the input, so sparsity, rather than a narrow bottleneck, is what forces each unit to become selective. This encourages features that each respond to one specific aspect of the input, instead of distributed representations in which information is spread across many neurons. Applied to a VLM, the autoencoder is trained to reconstruct the model's internal activations (for example, the outputs of its vision encoder), and each active hidden unit ideally corresponds to a single, well-defined concept: a monosemantic feature.
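As a rough illustration of the mechanism described above, the following sketch implements the forward pass and training objective of a sparse autoencoder in NumPy. The layer sizes, the L1 coefficient, the unit-norm decoder initialisation, and the random stand-in for VLM activations are all assumptions chosen for the example, not values from the research discussed here. The optimisation loop that would actually minimise the loss is omitted.

```python
import numpy as np

def init_sae(d_model, d_hidden, seed=0):
    """Randomly initialise SAE parameters; d_hidden > d_model gives an overcomplete dictionary."""
    rng = np.random.default_rng(seed)
    W_enc = rng.normal(0.0, 1.0 / np.sqrt(d_model), size=(d_model, d_hidden))
    # Each decoder row is one feature's direction in activation space; normalise to unit length.
    W_dec = W_enc.T.copy()
    W_dec /= np.linalg.norm(W_dec, axis=1, keepdims=True)
    b_enc = np.zeros(d_hidden)
    b_dec = np.zeros(d_model)
    return W_enc, b_enc, W_dec, b_dec

def sae_forward(x, W_enc, b_enc, W_dec, b_dec):
    """Encode activations x of shape (batch, d_model) into codes f and reconstruct x_hat."""
    f = np.maximum(0.0, (x - b_dec) @ W_enc + b_enc)  # ReLU: codes are non-negative
    x_hat = f @ W_dec + b_dec                          # linear decoder
    return f, x_hat

def sae_loss(x, f, x_hat, l1_coeff=1e-3):
    """Reconstruction error plus an L1 penalty that pushes most codes towards zero."""
    recon = np.mean(np.sum((x - x_hat) ** 2, axis=1))
    sparsity = np.mean(np.sum(np.abs(f), axis=1))
    return recon + l1_coeff * sparsity, recon, sparsity

# Usage: random vectors stand in for activations taken from a VLM's vision encoder.
x = np.random.default_rng(1).normal(size=(8, 64))
params = init_sae(d_model=64, d_hidden=256)
f, x_hat = sae_forward(x, *params)
total, recon, sparsity = sae_loss(x, f, x_hat)
print(f.shape, x_hat.shape)  # (8, 256) (8, 64)
print("fraction of active features:", float((f > 0).mean()))
```

At initialisation many codes are active; it is training against this loss, with any gradient-based optimiser, that makes the codes sparse. The coefficient `l1_coeff` sets the trade-off between reconstruction quality and sparsity.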

Research suggests that monosemantic features make VLMs more interpretable. Because each feature is tied to a single concept, researchers can inspect which features fire on a given input and trace how they relate to the model's output. This is particularly valuable in critical applications where transparency and explainability are essential. Proponents also argue that representations built from monosemantic features should be less susceptible to noise and irrelevant variation in the input, since each feature tracks one distinct concept rather than a mixture of several.
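One common way to put this interpretability to work is to look at the inputs that most strongly activate each feature and check whether they share a single concept. The sketch below does this on toy data. The `monosemanticity_proxy` function is a hypothetical score written for this example: an activation-weighted average cosine similarity between the inputs a feature fires on, computed in a separate embedding space. It is not guaranteed to match any metric used in the research cited here, and the embeddings and activations are made-up numbers.

```python
import numpy as np

def top_activating(acts, feature, k=5):
    """Indices of the k inputs on which `feature` fires most strongly (ties keep input order)."""
    return np.argsort(-acts[:, feature], kind="stable")[:k]

def monosemanticity_proxy(acts, emb, feature):
    """Hypothetical score: activation-weighted mean cosine similarity of inputs that fire `feature`.

    acts: (n_inputs, n_features) non-negative SAE activations
    emb:  (n_inputs, d) embeddings of the same inputs from a reference encoder (an assumption)
    """
    a = acts[:, feature] / (acts[:, feature].max() + 1e-12)  # scale weights to [0, 1]
    e = emb / np.linalg.norm(emb, axis=1, keepdims=True)      # unit-normalise embeddings
    sim = e @ e.T                                             # pairwise cosine similarity
    w = np.outer(a, a)
    np.fill_diagonal(w, 0.0)                                  # ignore self-pairs
    return float((w * sim).sum() / (w.sum() + 1e-12))

# Toy data: inputs 0-2 share one concept, inputs 3-5 share another.
emb = np.array([[1.0, 0.0], [1.0, 0.1], [0.9, 0.0],
                [0.0, 1.0], [0.1, 1.0], [0.0, 0.9]])
# Feature 0 fires only on the first concept; feature 1 fires equally on everything.
acts = np.array([[3.0, 1.0], [2.0, 1.0], [4.0, 1.0],
                 [0.0, 1.0], [0.0, 1.0], [0.0, 1.0]])

print(top_activating(acts, feature=0, k=2))  # [2 0]
print(round(monosemanticity_proxy(acts, emb, 0), 3))
print(round(monosemanticity_proxy(acts, emb, 1), 3))
```

In this toy setup, feature 0 scores close to 1 because everything it fires on belongs to one concept, while feature 1 scores well below it because it mixes two unrelated groups of inputs.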

The discovery of monosemantic features in VLMs opens up new possibilities for developing more powerful and reliable AI systems. By training sparse autoencoders on a model's internal activations, researchers can gain a clearer view of what the model has learned about the visual world, which may help in building models that handle more complex tasks. Future research could focus on optimizing the architecture of sparse autoencoders to make training more efficient and to further improve the interpretability of the learned features. These findings could eventually benefit areas such as medical image analysis, autonomous navigation, and robotics.
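One architectural variant that has been explored replaces the L1 penalty with a TopK activation, as in OpenAI's work on scaling sparse autoencoders. The encoder keeps only the k largest pre-activations for each input and zeroes the rest, which fixes the number of active features directly and removes the need to tune a sparsity coefficient. The NumPy sketch below shows only the encoding step under that assumption; the sizes, k, and random weights are illustrative.

```python
import numpy as np

def topk_encode(x, W_enc, b_enc, b_dec, k):
    """TopK SAE encoder: keep the k largest pre-activations per input, zero the rest."""
    pre = (x - b_dec) @ W_enc + b_enc               # (batch, d_hidden)
    idx = np.argpartition(pre, -k, axis=1)[:, -k:]  # column indices of the k largest per row
    rows = np.arange(pre.shape[0])[:, None]         # row indices, broadcast against idx
    f = np.zeros_like(pre)
    f[rows, idx] = np.maximum(pre[rows, idx], 0.0)  # ReLU on the surviving entries
    return f

# Usage with hypothetical sizes: 32-dimensional activations, 128 features, k = 8.
rng = np.random.default_rng(0)
d_model, d_hidden, k = 32, 128, 8
W_enc = rng.normal(0.0, 1.0 / np.sqrt(d_model), size=(d_model, d_hidden))
W_dec = W_enc.T.copy()
b_enc, b_dec = np.zeros(d_hidden), np.zeros(d_model)

x = rng.normal(size=(4, d_model))
f = topk_encode(x, W_enc, b_enc, b_dec, k)
x_hat = f @ W_dec + b_dec                           # linear decoder
print(np.count_nonzero(f, axis=1))                  # at most k per row
```

Because k is fixed, sparsity no longer has to be balanced against reconstruction error through a penalty coefficient, so training can concentrate on reconstruction quality.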

Mindverse, as a provider of AI-powered content solutions, is following these developments with great interest. The integration of monosemantic features into AI models could significantly enhance the capabilities of text and image generation tools, chatbots, and knowledge bases. The ability to extract precise and contextual information from visual and textual data is crucial for developing more intelligent and effective AI applications.

Bibliography:
- https://arxiv.org/abs/2309.08600
- https://transformer-circuits.pub/2023/monosemantic-features
- https://paperreading.club/page?id=297118
- https://transformer-circuits.pub/2024/scaling-monosemanticity/
- https://arxiv.org/html/2502.06755v1
- https://www.researchgate.net/publication/388882898_Sparse_Autoencoders_for_Scientifically_Rigorous_Interpretation_of_Vision_Models
- https://openreview.net/forum?id=F76bwRSLeK
- https://cdn.openai.com/papers/sparse-autoencoders.pdf
- https://math.mit.edu/research/highschool/primes/materials/2024/DuPlessie.pdf
- https://huggingface.co/papers/2309.08600